AI Hardware and LLM Trends Converge

AI hardware innovation accelerates, from new chip-orchestration software to proposed orbital data centers.

Rapid progress in artificial intelligence is driving developments in both model capabilities and the hardware infrastructure beneath them. Recent reports highlight a dual focus: optimizing existing hardware for complex AI tasks and exploring radical new approaches to data center design.

Optimizing AI Chip Synergy

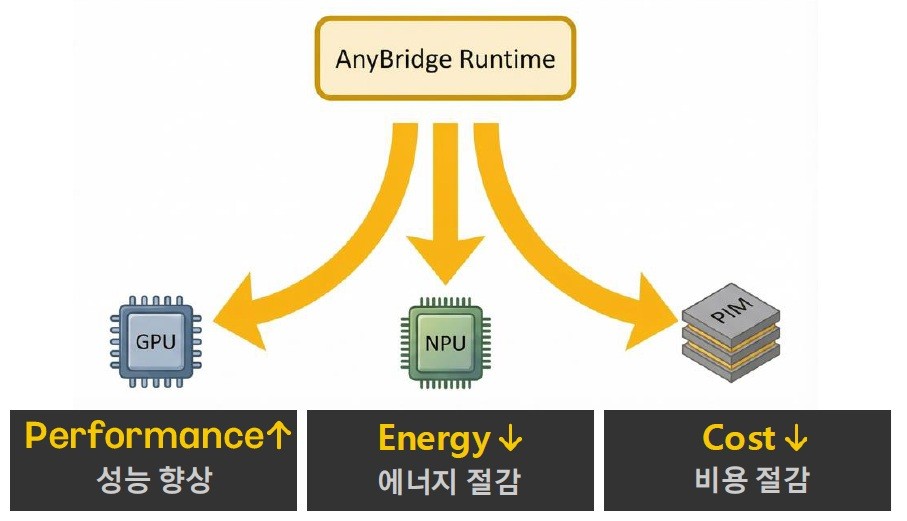

South Korea's KAIST has unveiled new infrastructure software designed to orchestrate diverse AI semiconductor chips, including GPUs, NPUs, and PIM (processing-in-memory) devices. The work, led by Professor Jongse Park's AniBridge AI team, won an award at the '4 Major Science Institutes x Kakao AI Incubation Project' and aims to improve the efficiency of large language models (LLMs). The key implication is a potential reduction in reliance on expensive, power-hungry GPUs. By distributing workloads across specialized chips, the approach promises more flexible and cost-effective AI deployments, which matters as LLMs continue to grow in complexity and demand.

This development arrives as major tech players push the boundaries of AI model performance. Google recently announced Gemini 3.1 Pro, an upgrade explicitly designed for "complex problem-solving." Unlike the earlier Gemini 3 Pro and Gemini 3 Flash, the 3.1 Pro version targets scenarios where a simple answer is insufficient, indicating a move toward more nuanced, analytical capabilities. The relationship runs both ways: more capable models need more sophisticated and efficient hardware, and better hardware in turn enables more capable models. Google's continued iteration on the Gemini line underscores the intense competition and rapid pace of model development.

Radical Visions for AI Data Centers

Beyond optimizing current hardware, more ambitious visions for AI data centers are emerging, with significant caveats. Elon Musk has announced a merger of SpaceX and xAI with the aim of launching a constellation of one million satellites to serve as orbital data centers, a futuristic and controversial prospect. The concept aims to move processing power into space, potentially bypassing terrestrial limitations. As Engadget highlights, however, the environmental implications are a major concern, including added space debris and light pollution. The proposal underscores the immense computational demands expected of future AI, which are pushing engineers to consider entirely new paradigms for data storage and processing, even though feasibility and sustainability remain heavily debated.

Future Outlook

The convergence of advanced AI model development and new hardware approaches will continue to shape the technology landscape. Further progress is likely in heterogeneous computing, where specialized chips work in concert, driven by the need for more efficient LLM training and inference. At the same time, the boldest infrastructure proposals, such as orbital data centers, will likely spur debate and research into sustainable, large-scale AI computation. The trajectory points toward increasingly powerful AI that demands equally inventive ways to power and manage it while balancing performance with environmental responsibility.
